How to install DiagnosisTools on my Synology DiskStation 411, or: Why I love the Internet

Image: Synology Inc., https://www.synology.com/img/products/detail/DS423/heading.png

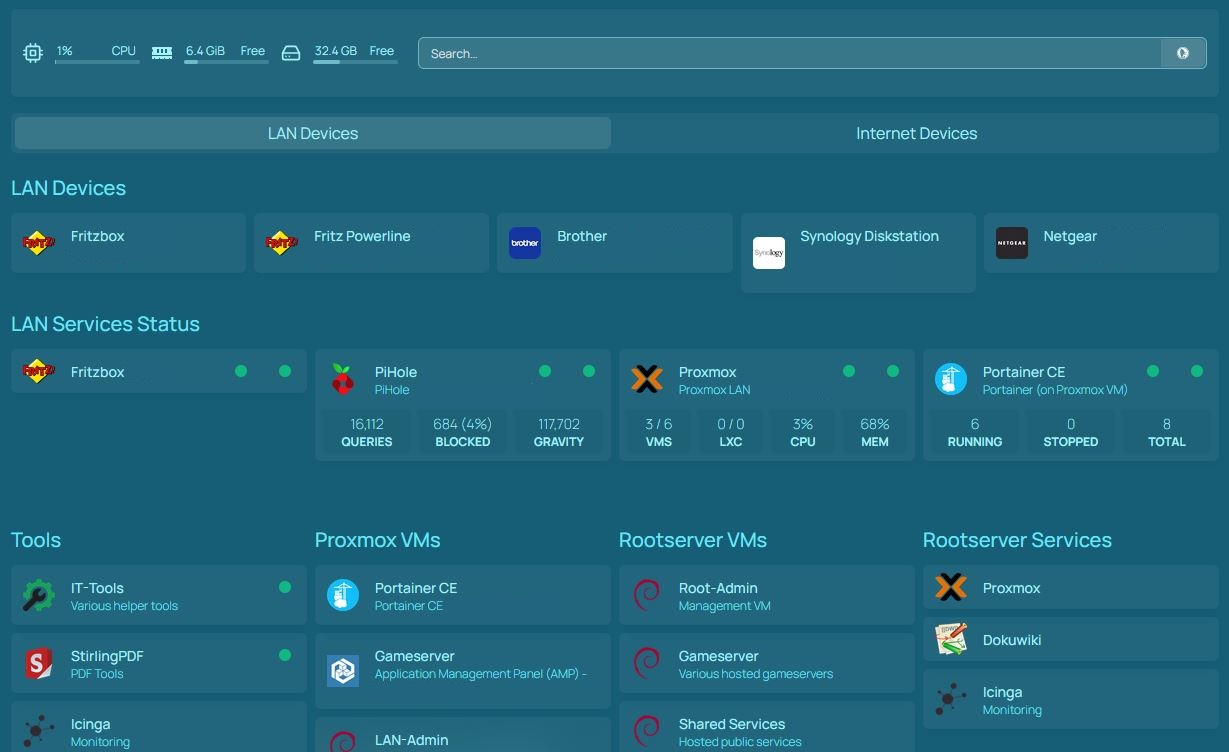

I own a Synology DiskStation 411, in short: DS411. It looks like the one in the picture, which actually shows the successor model, the DS423. The DS411 runs a custom Linux distribution and is therefore missing a lot of common tools. Recently I had a network error which made my NAS unreachable from one of my Proxmox VMs. Debugging was made harder because I had no tools such as lsof, strace or strings.
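A quick way to see which of these tools a box is missing is a loop over `command -v`, the POSIX built-in also used further down. This is a minimal sketch; the function name `missing_tools` is made up for illustration.

```sh
#!/bin/sh
# Print every command name given as an argument that cannot be found.
# Relies only on the POSIX built-in "command -v", so it also works on a
# stripped-down system without which, whereis or type.
missing_tools() {
    for tool in "$@"; do
        # command -v succeeds (and prints the resolved name) if the command exists
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
        fi
    done
}

# Example: the tools I was missing on the DS411
missing_tools lsof strace strings
```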

I googled a bit and learned that Synology offers a DiagnosisTool package which contains all these tools and more. The Package Center, however, showed no such package for me. From the search results I gathered that it should appear in the Package Center if there is a version compatible with the DSM version running on the NAS. So it seems there is no compatible version for my DS411 running DSM 6.2.4?

Luckily there is a command to install the tools: synogear install

root@DiskStation:~# synogear install

failed to get DiagnosisTool ... can't parse actual package download link from info file

Okay, that's uncool. But it still doesn't explain why we can't install them. What does synogear install actually do? Time to investigate. As we have no stat or whereis, we have to resort to command -v, which is included in the POSIX standard. (Yes, which is available, but as command is available everywhere, it's the better choice.)

root@DiskStation:~# command -v synogear

/usr/syno/bin/synogear

root@DiskStation:~# head /usr/syno/bin/synogear

#!/bin/sh

DEBUG_MODE="no"

TOOL_PATH="/var/packages/DiagnosisTool/target/tool/"

TEMP_PROFILE_DIR="/var/packages/DiagnosisTool/etc/"

STATUS_NOT_INSTALLED=101

STATUS_NOT_LOADED=102

STATUS_LOADED=103

STATUS_REMOVED=104

Nice! /usr/syno/bin/synogear is a simple shell script. Therefore we can just run it with tracing enabled (-x) and see what is happening without having to read every single line.
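Because `-x` writes its trace to stderr, a long trace can be filtered instead of scrolled through. A minimal sketch, assuming a POSIX `sh` and `grep -E`; `trace_lines` is a hypothetical helper name. Keep in mind that tracing still executes the script, so `synogear install` really tries to install.

```sh
#!/bin/sh
# Run a shell script with tracing (sh -x) and print only the trace lines
# matching an extended regular expression.
# "2>&1 >/dev/null" sends the trace (stderr) into the pipe and discards
# the script's normal stdout output.
trace_lines() {
    pattern=$1
    shift
    sh -x "$@" 2>&1 >/dev/null | grep -E -e "$pattern"
}
```

On the NAS, `trace_lines 'curl|jq' /usr/syno/bin/synogear install` would show only the download and JSON-parsing steps.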

root@DiskStation:~# bash -x synogear install

+ DEBUG_MODE=no

+ TOOL_PATH=/var/packages/DiagnosisTool/target/tool/

+ TEMP_PROFILE_DIR=/var/packages/DiagnosisTool/etc/

+ STATUS_NOT_INSTALLED=101

[...]

++ curl -s -L 'https://pkgupdate.synology.com/firmware/v1/get?language=enu&timezone=Amsterdam&unique=synology_88f6282_411&major=6&minor=2&build=25556&package_update_channel=stable&package=DiagnosisTool'

+ reference_json='{"package":{}}'

++ echo '{"package":{}}'

++ jq -e -r '.["package"]["link"]'

+ download_url=null

+ echo 'failed to get DiagnosisTool ... can'\''t parse actual package download link from info file'

failed to get DiagnosisTool ... can't parse actual package download link from info file

+ return 255

+ return 255

+ status=255

+ '[' 255 '!=' 102 ']'

+ return 1

root@DiskStation:~#

The main problem seems to be an empty JSON response from https://pkgupdate.synology.com/firmware/v1/get?language=enu&timezone=Amsterdam&unique=synology_88f6282_411&major=6&minor=2&build=25556&package_update_channel=stable&package=DiagnosisTool: synogear looks for the package link in the reply, and {"package":{}} has none. Opening that URL in a browser confirms it. So there really seems to be no package for my model and DSM version combination.
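To repeat the check for another model or DSM build without running synogear, the query can be rebuilt from the URL in the trace. This is a sketch: the meaning of each parameter (`unique`, `major`, `minor`, `build`) is inferred from that one URL, not from any documentation, and `pkgupdate_url` is a hypothetical helper.

```sh
#!/bin/sh
# Rebuild the pkgupdate query seen in the synogear trace.
# Parameter meanings are guessed from a single example URL; the fixed
# values (language, timezone, channel) are copied from that URL as-is.
pkgupdate_url() {
    unique=$1    # e.g. synology_88f6282_411
    major=$2     # DSM major version, e.g. 6
    minor=$3     # DSM minor version, e.g. 2
    build=$4     # DSM build number, e.g. 25556
    package=$5   # package name, e.g. DiagnosisTool
    printf 'https://pkgupdate.synology.com/firmware/v1/get?language=enu&timezone=Amsterdam&unique=%s&major=%s&minor=%s&build=%s&package_update_channel=stable&package=%s\n' \
        "$unique" "$major" "$minor" "$build" "$package"
}
```

Piping `curl -s -L "$(pkgupdate_url synology_88f6282_411 6 2 25556 DiagnosisTool)"` into `jq -e -r '.package.link'` reproduces synogear's check: with the `{"package":{}}` reply, jq prints `null` and exits with a non-zero status.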

Through my search I also learned that there is a package archive at https://archive.synology.com/download/Package/DiagnosisTool/ which lists several versions of the DiagnosisTool, with one package per CPU architecture. But that didn't give many clues either, as I wasn't familiar with many of the architecture names and none of them seemed to match my CPU: no Feroceon or 88FR131 or the like.

root@DiskStation:~# cat /proc/cpuinfo

Processor : Feroceon 88FR131 rev 1 (v5l)

BogoMIPS : 1589.24

Features : swp half thumb fastmult edsp

CPU implementer : 0x56

CPU architecture: 5TE

CPU variant : 0x2

CPU part : 0x131

CPU revision : 1

Hardware : Synology 6282 board

Revision : 0000

Serial : 0000000000000000

As I didn't want to randomly install all sorts of packages for different CPU architectures, not knowing how well Synology prevents the installation of non-matching packages, I asked in the r/synology subreddit and stopped my side quest at this point, focusing on the main problem.

Random redditors to the rescue!

Nothing much happened for 2 months and I had already forgotten about that thread. My problem with the VM was solved in the meantime and I had no reason to pursue it any further.

Then someone replied. This person had apparently tried all sorts of packages on their DiskStation and provided a link to the package that worked. That is where I noticed something. The link provided was: https://global.synologydownload.com/download/Package/spk/DiagnosisTool/1.1-0112/DiagnosisTool-88f628x-1.1-0112.spk and I recognized the string 88f628x but couldn't pin down where I had spotted it. Then it dawned on me: 88f for the Feroceon 88FR131 and 628x for all Synology 628x boards (my /proc/cpuinfo reports a "Synology 6282 board", and the update query above even contains unique=synology_88f6282_411). Could this really be it?
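The board number can also be pulled out of `/proc/cpuinfo` in a script. A minimal sketch, assuming the `Hardware : Synology NNNN board` wording seen on the DS411 (tabs or spaces before the colon); the helper names are hypothetical, and going from `6282` to the archive name `88f628x` is still the guess described above, not a documented rule.

```sh
#!/bin/sh
# Print the value of one /proc/cpuinfo field read from stdin,
# e.g. "Hardware" -> "Synology 6282 board".
# The field name is used as a regex as-is (no escaping).
cpuinfo_field() {
    sed -n "s/^$1[[:space:]]*:[[:space:]]*//p"
}

# Extract the board number from the Hardware field.
# Assumes the "Synology NNNN board" wording; prints nothing otherwise.
board_number() {
    cpuinfo_field Hardware | sed -n 's/^Synology \([0-9][0-9]*\) board$/\1/p'
}
```

On the NAS, `board_number < /proc/cpuinfo` should print `6282`, and `cpuinfo_field 'CPU architecture' < /proc/cpuinfo` prints `5TE`.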

Armed with this information I quickly identified https://global.synologydownload.com/download/Package/spk/DiagnosisTool/3.0.1-3008/DiagnosisTool-88f628x-3.0.1-3008.spk as the last version for my DS411 and installed it via the Package Center GUI (button "Manual installation", then navigating to the downloaded .spk file on my computer).
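Both links follow the same pattern, so a download URL can be assembled from a version and an architecture name. A sketch under that assumption only: the pattern is inferred from the two URLs above and may not hold for other packages or versions; `spk_url` is a hypothetical helper.

```sh
#!/bin/sh
# Build a DiagnosisTool download URL from version and architecture.
# Layout inferred from two example links: <base>/<version>/DiagnosisTool-<arch>-<version>.spk
spk_url() {
    version=$1   # e.g. 3.0.1-3008
    arch=$2      # e.g. 88f628x
    printf 'https://global.synologydownload.com/download/Package/spk/DiagnosisTool/%s/DiagnosisTool-%s-%s.spk\n' \
        "$version" "$arch" "$version"
}
```

For example, `curl -L -O "$(spk_url 3.0.1-3008 88f628x)"` saves the package under its original file name, ready for the manual installation.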

Note: The .spk file seems to be a normal (compressed) tar file and can be opened with the usual tools. The file structure inside is roughly similar to that of an RPM or DEB package, which makes it easy to understand what happens during and after package installation.
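Assuming the .spk really is a tar archive, as it seems above, its contents can be listed or unpacked without installing anything. A minimal sketch with hypothetical helper names; the member names you will see depend on the package. If your tar does not detect compression by itself, add the matching option (for example `-z` for gzip).

```sh
#!/bin/sh
# List the members of a .spk file (assumed to be a tar archive).
spk_list() {
    tar -tf "$1"
}

# Unpack a .spk file into a directory for inspection; nothing is installed.
spk_extract() {
    mkdir -p "$2" && tar -xf "$1" -C "$2"
}
```

For instance, `spk_list DiagnosisTool-88f628x-3.0.1-3008.spk` shows what the package would put on the NAS.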

The installation went fine with no errors reported, and after that synogear install worked:

root@DiskStation:~# synogear install

Tools are installed and ready to use.

DiagnosisTool version: 3.0.1-3008

And I was finally able to use lsof!

root@DiskStation:~# lsof -v

lsof version information:

revision: 4.89

latest revision: ftp://lsof.itap.purdue.edu/pub/tools/unix/lsof/

latest FAQ: ftp://lsof.itap.purdue.edu/pub/tools/unix/lsof/FAQ

latest man page: ftp://lsof.itap.purdue.edu/pub/tools/unix/lsof/lsof_man

constructed: Mon Jan 20 18:58:20 CST 2020

compiler: /usr/local/arm-marvell-linux-gnueabi/bin/arm-marvell-linux-gnueabi-ccache-gcc

compiler version: 4.6.4

compiler flags: -DSYNOPLAT_F_ARMV5 -O2 -mcpu=marvell-f -include /usr/syno/include/platformconfig.h -DSYNO_ENVIRONMENT -DBUILD_ARCH=32 -D_LARGEFILE64_SOURCE -D_FILE_OFFSET_BITS=64 -g -DSDK_VER_MIN_REQUIRED=600 -pipe -fstack-protector --param=ssp-buffer-size=4 -Wformat -Wformat-security -D_FORTIFY_SOURCE=2 -O2 -Wno-unused-result -DNETLINK_SOCK_DIAG=4 -DLINUXV=26032 -DGLIBCV=215 -DHASIPv6 -DNEEDS_NETINET_TCPH -D_FILE_OFFSET_BITS=64 -D_LARGEFILE64_SOURCE -DLSOF_ARCH="arm" -DLSOF_VSTR="2.6.32" -I/usr/local/arm-marvell-linux-gnueabi/arm-marvell-linux-gnueabi/libc/usr/include -I/usr/local/arm-marvell-linux-gnueabi/arm-marvell-linux-gnueabi/libc/usr/include -O

loader flags: -L./lib -llsof

Anyone can list all files.

/dev warnings are disabled.

Kernel ID check is disabled.

The redditor was also kind enough to let me know that I have to execute synogear install each time before I can use these tools. Huh? Why is that? Shouldn't they be in my PATH?

Turns out: No, the directory /var/packages/DiagnosisTool/target/tool/ isn't included in our PATH environment variable.

root@DiskStation:~# echo $PATH

/sbin:/bin:/usr/sbin:/usr/bin:/usr/syno/sbin:/usr/syno/bin:/usr/local/sbin:/usr/local/bin

synogear install takes care of that. It copies /etc/profile to /var/packages/DiagnosisTool/etc/.profile while removing all lines starting with PATH or export PATH, adds a new PATH that includes the tools directory, exports it, and sets the ENV environment variable to the new .profile file.

Most likely this is just a precaution for novice users.

And to check whether the tools are "loaded", synogear greps $PATH for /var/packages/DiagnosisTool/target/tool/.
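Put together, the profile handling described above could look roughly like this. It is a hypothetical re-creation from that description, not synogear's actual code: the exact `grep` patterns, where the tool directory is placed in `PATH`, and the function names are assumptions.

```sh
#!/bin/sh
# Hypothetical re-creation of synogear's profile handling (not its real code).
TOOL_PATH="/var/packages/DiagnosisTool/target/tool/"

make_tool_profile() {
    src=$1    # normally /etc/profile
    dest=$2   # synogear uses /var/packages/DiagnosisTool/etc/.profile
    # Copy the profile without lines starting with PATH or export PATH.
    # grep exits 1 when no line is left; only treat status 2 (error) as failure.
    grep -v -e '^PATH' -e '^export PATH' "$src" > "$dest" || [ "$?" -eq 1 ] || return
    # Append a PATH that keeps the inherited value and adds the tool directory.
    # $PATH stays literal here and is expanded when the profile is sourced.
    printf 'PATH="$PATH:%s"\nexport PATH\n' "$TOOL_PATH" >> "$dest"
}

# "Loaded" check: does $PATH contain the tool directory (fixed-string match)?
tools_loaded() {
    printf '%s\n' "$PATH" | grep -q -F "$TOOL_PATH"
}
```

A shell would then pick the file up through something like `ENV=/var/packages/DiagnosisTool/etc/.profile; export ENV`, since POSIX `sh` reads the file named in `ENV` when it starts interactively.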

So yeah, there is no technical reason preventing us from simply adding /var/packages/DiagnosisTool/target/tool/ to $PATH and being done with it.
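To make this permanent instead of running synogear install every time, one option is to append the directory once in a login profile such as `~/.profile`. A minimal sketch: `path_append` is a hypothetical helper, the choice of `~/.profile` is an assumption about your shell setup, and the trailing slash mirrors the `TOOL_PATH` value in synogear so that its "loaded" check should still match.

```sh
#!/bin/sh
# Print the PATH-style list $1 with directory $2 appended,
# unless $2 is already one of its entries (so sourcing twice is harmless).
path_append() {
    case ":$1:" in
        *":$2:"*) printf '%s\n' "$1" ;;
        *) printf '%s\n' "${1:+$1:}$2" ;;
    esac
}

# Example lines for ~/.profile (assumed location)
PATH=$(path_append "$PATH" /var/packages/DiagnosisTool/target/tool/)
export PATH
```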

And this is why I love the Internet. I would never have figured that out if someone hadn't posted a reply including the file that worked.

New learnings

Synology emailed me about critical security vulnerabilities present in DSM 6.2.4-25556 Update 7 which are fixed in 6.2.4-25556 Update 8 (read the Release Notes). However, the update wasn't offered to me via the normal update dialog in DSM, as it is a staged rollout. Therefore I opted to download a patch file from the Synology support site. This means I did not download the whole DSM package for a specific version, only a patch from one version to another. And here I noticed that the architecture name is included in the patch filename. Nice.

If you visit https://www.synology.com/en-global/support/download/DS411?version=6.2, do not just click on Download; instead, choose your current DSM version and the target version you wish to update to. You are then offered a patch file, and the identifier/name of the architecture your DiskStation uses is part of the filename.

This should be a reliable way to identify the architecture for all Synology models, in case it isn't clear from the CPU/board name etc., as was the case for me.

List of included tools

Someone asked me for a list of all tools included in the DiagnosisTool package. Here you go:

admin@DiskStation:~$ ls -l /var/packages/DiagnosisTool/target/tool/ | awk '{print $9}'

addr2line

addr2name

ar

arping

as

autojump

capsh

c++filt

cifsiostat

clockdiff

dig

domain_test.sh

elfedit

eu-addr2line

eu-ar

eu-elfcmp

eu-elfcompress

eu-elflint

eu-findtextrel

eu-make-debug-archive

eu-nm

eu-objdump

eu-ranlib

eu-readelf

eu-size

eu-stack

eu-strings

eu-strip

eu-unstrip

file

fio

fix_idmap.sh

free

gcore

gdb

gdbserver

getcap

getpcaps

gprof

iftop

iostat

iotop

iperf

iperf3

kill

killall

ld

ld.bfd

ldd

log-analyzer.sh

lsof

ltrace

mpstat

name2addr

ncat

ndisc6

nethogs

nm

nmap

nping

nslookup

objcopy

objdump

perf-check.py

pgrep

pidof

pidstat

ping

ping6

pkill

pmap

ps

pstree

pwdx

ranlib

rarpd

rdisc

rdisc6

readelf

rltraceroute6

run

sa1

sa2

sadc

sadf

sar

setcap

sid2ugid.sh

size

slabtop

sockstat

speedtest-cli.py

strace

strings

strip

sysctl

sysstat

tcpdump_wrapper

tcpspray

tcpspray6

tcptraceroute6

telnet

tload

tmux

top

tracepath

traceroute6

tracert6

uptime

vmstat

w

watch

zblacklist

zmap

ztee