The 11th commandment: Thou shalt not copy from the artificious intellect without understanding

It happened again. Someone asked an LLM a benign technical question: "How to check the speed of a hard drive?"

And the LLM answered!

Only the human wasn't clever enough to understand the answer. Nor was he particularly careful in formulating the question. Certain details were left out; critical information was not recognised as such. The human was too greedy for this long-sought knowledge.

But... is an answer to a thoughtlessly asked question a reliable & helpful one?

The human didn't care. He copied & pasted the command directly into the root shell of his machine.

The sacred machine spirit awakened to life and fulfilled its divine duty.

And... it actually gave the human the answer he was looking for. Only later did the human learn that the machine had given him even more. The machine spirit had also answered the question, "How quickly can the hard drive overwrite all my cherished memories from 20 years ago that I never backed up?"

TL;DR, from r/selfhosted: "TIFU by copypasting code from AI. Lost 20 years of memories"

People! Make backups! Never, ever work on the single, only, lifetime copy of your data while executing potentially harmful commands. Jesus! Why do so many people fail to grasp this? I don't get it.

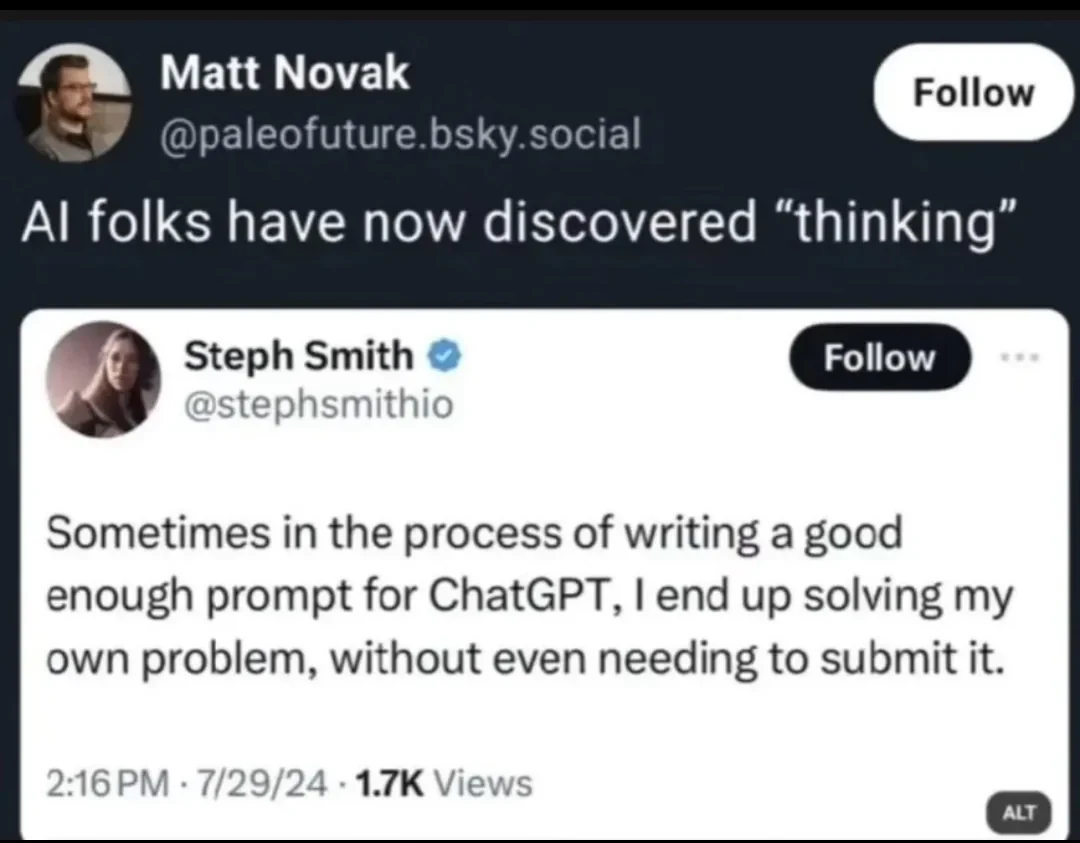

And as for AI tools like ChatGPT, DeepSeek & others: yes, they can be great & useful. But they don't understand. They have no sentience. They can't comprehend. They have no understanding of syntax or semantics, and therefore they can't check whether the two match. ChatGPT won't notice that the answer it gives you doesn't match the question. Hell, there are enough people out there who won't notice either. YOU have to think for the LLM! YOU have to give it the full, complete context. Anything you leave out will not be considered in the answer.

In addition: pick a topic you're well-versed in. Do you know how much just plain wrong stuff there is on the internet about that subject? Exactly.

Now ask yourself: Why should it be any different in a field you know nothing about?

All the AI companies have just pirated the whole internet. Copied & absorbed what they could in the vague hope of getting that sweet, sweet venture capital money. Every technically incorrect solution. Every "easy fix" that says "just do a `chmod -R 777 *` and it works".

And you just copy and paste that into your terminal?

If an LLM gives you an answer, do the following:

- You ask the LLM to check whether the answer is correct. Oh, yes. You'd be surprised how often an LLM will correct itself.

- And we haven't even touched the topic of hallucinations...

- Then you find the documentation/manpage for that command

- You read said documentation/manpage(s) until you understand what the code/command does and how it works

- Now you may execute that command

Sounds like too much work? Yeah... about that...