Little helper scripts - Part 2: automation.sh / automation2.sh

Part 1 of this series can be found here: Little helper scripts - Part 1: no-screenlock-during-meeting.ps1. Alternatively, use the following tag link: https://admin.brennt.net/tag/littlehelperscripts

This script isn't rocket science. Nothing spectacular. But the number of hours it has saved me in various projects is astonishing.

The sad reality

There are far too many companies (even IT-focused companies!) out there with a very low level of automation. Virtual machines are created by hand - not by some script using an API. Configurations are not deployed via Configuration Management software like Puppet/OpenVox/Chef/Ansible or runbook automation software like Rundeck - no, they are handcrafted. Bespoke. System administration like it's 1753. With all the implications and drawbacks that brings.

Containerisation? Yeah... Well... "A few docker containers here and there but nobody in the company really knows how that stuff works so we leave it alone" is a phrase I have heard more than a few times, either directly or relayed by colleagues working at other companies.

This means that I have to log on to systems manually and execute commands by hand. Something I can do and do regularly in my home lab. But to do it for dozens or even hundreds of systems? Yeah... No. Sorry, I've got better things to do. And as an external consultant, the client always keeps an eye on my performance metrics. After all, they are paying my employer a lot of money for my services. Sitting there all day and getting paid to copy and paste commands? It doesn't look good on my performance reporting spreadsheet and it doesn't meet my personal standards of what a consultant should be able to deliver.

I'm just a guest

Scripting to the rescue!

First I went with the cheap & easy solution of `for server in hosta hostb hostc; do ssh user@$server "command --some-parameter bla"; done`, but I grew tired of rewriting it from scratch for every task.

Naturally, systems are often grouped into categories (webservers, etc.) or perform the same tasks (think of clusters). Hence the same commands must be executed on the same set of hosts again and again. One of my colleagues had already compiled lists of hostnames grouped by task, role and installed software, as some systems had the same software installed but were configured to perform different tasks with it.

These lists gave me an idea: Why not feed them into a for or while loop and be done?
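A minimal sketch of that idea, assuming a plain text file with one hostname per line. The function name `run_on_hostlist` is hypothetical, and skipping blank lines and `#` comments is my own addition rather than something the original lists required. The important detail is `ssh -n`: without it, ssh reads from STDIN and swallows the remaining lines of the host list, so the loop would silently stop after the first host.

```bash
#!/bin/bash
# Hypothetical helper: run one command on every host listed in a file.
# Assumes one hostname per line; blank lines and #-comments are skipped.
run_on_hostlist() {
  local hostlist="$1" command="$2" host
  # "|| [ -n "$host" ]" also handles a last line without a trailing newline
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip empty lines and comment lines
    case "$host" in
      ''|'#'*) continue ;;
    esac
    echo "Connecting to $host ..."
    # -n redirects ssh's STDIN from /dev/null, so ssh cannot consume
    # the remaining lines of the host list
    ssh -n -o ConnectTimeout=10 "$host" "$command"
  done < "$hostlist"
}
```

Usage would then be as simple as `run_on_hostlist webservers.list "uptime"` (the file name is only an example).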

In the end I added some safety & DNS checks and named the script automation.sh. Later I added the capability to write the output of each host to a local logfile and named the script automation2.sh, which can be viewed below.
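The DNS check relies on `getent hosts`, which asks the system's name service switch (so `/etc/hosts` entries count too) and exits with status 2 if a name cannot be found. Because the script performs this check inside its main loop, a typo halfway down a list aborts the run only after the earlier hosts have already executed the command. A hypothetical variant is to validate the whole list before anything runs. The sketch below assumes the same one-host-per-line format, and the function name `check_hostlist` is my own.

```bash
#!/bin/bash
# Hypothetical pre-flight check: verify that every host in the list resolves
# before any command is executed. Reports ALL failing hosts, not just the
# first one, and returns 1 if at least one host does not resolve.
check_hostlist() {
  local hostlist="$1" host failed=0
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip empty lines
    case "$host" in
      '') continue ;;
    esac
    # getent exits with status 2 if the name cannot be found
    if ! getent hosts "$host" > /dev/null 2>&1; then
      echo "Host: $host is not resolving. Typo?" >&2
      failed=1
    fi
  done < "$hostlist"
  return "$failed"
}
```

Calling `check_hostlist "$HOSTLIST" || exit 2` before the main loop would turn "abort halfway through" into "abort before touching any host".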

Yes, it's just a glorified nesting of if-statements, but the amount of time this script has saved me is insane. And as it only uses basic POSIX & Bash commands, I've yet to find a system where it can't be executed.

As always: Please check my GitHub for the most recent version as I won't update the script shown in this article.

```bash
#!/bin/bash
# vim: set tabstop=2 smarttab shiftwidth=2 softtabstop=2 expandtab foldmethod=syntax :
#
# Small script to automate custom shell command execution
# Current version can be found here:
# https://github.com/ChrLau/scripts/blob/master/automation2.sh

# Bash strict mode
# read: http://redsymbol.net/articles/unofficial-bash-strict-mode/
set -euo pipefail
IFS=$'\n\t'

# Why pipefail?
# As we use "ssh command | tee" and tee will always succeed, our check for
# non-zero exit codes wouldn't work without it.
#
# The exit status of a pipeline is the exit status of the last command in the pipeline,
# unless the pipefail option is enabled (see: The Set Builtin).
# If pipefail is enabled, the pipeline's return status is the value of the last (rightmost)
# command to exit with a non-zero status, or zero if all commands exit successfully.

VERSION="1.6"
SCRIPT="$(basename "$0")"
# "|| true": with set -e a missing binary would otherwise end the script
# silently here, before the checks below can print a helpful message
SSH="$(command -v ssh || true)"
TEE="$(command -v tee || true)"

# Initialize all option variables, as set -u aborts on unset variables
HOSTLIST=""
COMMAND=""
SSH_USER=""
ABORT=""
SUDO="NO"
SSH_PARAMS=""
LOGFILE=""

# Colored output
RED="\e[31m"
GREEN="\e[32m"
ENDCOLOR="\e[0m"

# Test if ssh is present and executable
if [ ! -x "$SSH" ]; then
  echo -e "${RED}This script requires ssh to connect to the servers. Exiting.${ENDCOLOR}"
  exit 2
fi

# Test if tee is present and executable
if [ ! -x "$TEE" ]; then
  echo -e "${RED}tee not found.${ENDCOLOR} ${GREEN}Script can still be used,${ENDCOLOR} ${RED}but option -w CAN NOT be used.${ENDCOLOR}"
fi

function HELP {
  echo "$SCRIPT $VERSION: Execute custom shell commands on lists of hosts"
  echo "Usage: $SCRIPT -l /path/to/host.list -c \"command\" [-u <user>] [-a <YES|NO>] [-r] [-s \"options\"] [-w \"/path/to/logfile.log\"]"
  echo ""
  echo "Parameters:"
  echo " -l Path to the hostlist file, 1 host per line"
  echo " -c The command to execute. Needs to be in double-quotes, else the shell passes it as separate arguments"
  echo " -u (Optional) The user used for the SSH connection. (Default: \$USER)"
  echo " -a (Optional) Abort when the ssh-command fails? Use YES or NO (Default: YES)"
  echo " -r (Optional) When given, the command will be executed via 'sudo su -c'"
  echo " -s (Optional) Any SSH parameters you want to specify. Needs to be in double-quotes. (Default: empty)"
  echo "    Example: -s \"-i /home/user/.ssh/id_user\""
  echo " -w (Optional) Write STDERR and STDOUT to logfile (on the machine where $SCRIPT is executed)"
  echo ""
  echo "No arguments or -h will print this help."
  exit 0
}

# Print help if no arguments are given
if [ "$#" -eq 0 ]; then
  HELP
fi

# Parse arguments
while getopts ":l:c:u:a:hrs:w:" OPTION; do
  case "$OPTION" in
    l)
      HOSTLIST="${OPTARG}"
      ;;
    c)
      COMMAND="${OPTARG}"
      ;;
    u)
      SSH_USER="${OPTARG}"
      ;;
    a)
      ABORT="${OPTARG}"
      ;;
    r)
      SUDO="YES"
      ;;
    s)
      SSH_PARAMS="${OPTARG}"
      ;;
    w)
      LOGFILE="${OPTARG}"
      ;;
    h)
      HELP
      ;;
    *)
      HELP
      ;;
    # Not needed: as the getopts string starts with ":", getopts reports a
    # missing argument as ":" and an invalid option as "?", both caught by *)
    # :)
    #   echo "Missing argument"
    #   ;;
    # \?)
    #   echo "Invalid option"
    #   exit 1
    #   ;;
  esac
done

# Print a usage message if either mandatory argument is missing
if [ -z "$HOSTLIST" ] || [ -z "$COMMAND" ]; then
  echo "You need to specify -l and -c. Exiting."
  exit 1
fi

# Check if username was provided, if not use $USER environment variable
# (falling back to id -un, as $USER is not set in every environment)
if [ -z "$SSH_USER" ]; then
  SSH_USER="${USER:-$(id -un)}"
fi

# Check for YES or NO
if [ -z "$ABORT" ]; then
  # If empty, set to YES (default)
  ABORT="YES"
# Check if it's not NO or YES - we want to ensure a definite decision here
elif [ "$ABORT" != "NO" ] && [ "$ABORT" != "YES" ]; then
  echo "-a accepts either YES or NO (case-sensitive)"
  exit 1
fi

# If variable logfile is not empty
if [ -n "$LOGFILE" ]; then
  # -w relies on tee
  if [ ! -x "$TEE" ]; then
    echo -e "${RED}Option -w requires tee, which was not found. Aborting.${ENDCOLOR}"
    exit 1
  fi
  # Check if logfile is not present
  if [ ! -e "$LOGFILE" ]; then
    # Check if creating it was unsuccessful
    if ! touch "$LOGFILE"; then
      echo -e "${RED}Could not create logfile at $LOGFILE. Aborting. Please check permissions.${ENDCOLOR}"
      exit 1
    fi
  # When logfile is present...
  else
    # Check if it's writeable and abort when not
    if [ ! -w "$LOGFILE" ]; then
      echo -e "${RED}$LOGFILE is NOT writeable. Aborting. Please check permissions.${ENDCOLOR}"
      exit 1
    fi
  fi
fi

# Execute command via sudo or not?
if [ "$SUDO" = "YES" ]; then
  COMMANDPART="sudo su -c '${COMMAND}'"
else
  COMMANDPART="${COMMAND}"
fi

# Split the -s string on spaces into an array, so every option reaches ssh as
# its own argument. (IFS is set to newline/tab above, so "$SSH_PARAMS" would be
# passed as ONE argument, and an empty -s would pass an empty argument.)
IFS=' ' read -r -a SSH_OPTS <<< "$SSH_PARAMS"

# Check if hostlist is readable
if [ -r "$HOSTLIST" ]; then
  # Check that hostlist is not 0 bytes
  if [ -s "$HOSTLIST" ]; then
    # "|| [ -n "$HOST" ]" also processes a last line without trailing newline
    while IFS= read -r HOST || [ -n "$HOST" ]; do
      # getent returns exit code 2 if a hostname isn't resolving
      # (tested via "if !", as set -e would otherwise exit before our message)
      if ! getent hosts "$HOST" &> /dev/null; then
        echo -e "${RED}Host: $HOST is not resolving. Typo? Aborting.${ENDCOLOR}"
        exit 2
      fi

      # Log STDERR and STDOUT to $LOGFILE if specified
      if [ -n "$LOGFILE" ]; then
        echo -e "${GREEN}Connecting to $HOST ...${ENDCOLOR}" 2>&1 | tee -a "$LOGFILE"
        # Test if ssh-command was successful: pipefail makes ssh's exit code count,
        # "if !" keeps set -e from exiting so that -a NO can be honored.
        # -n keeps ssh from reading the hostlist via STDIN.
        if ! ssh -n -o ConnectTimeout=10 ${SSH_OPTS[@]+"${SSH_OPTS[@]}"} "$SSH_USER"@"$HOST" "${COMMANDPART}" 2>&1 | tee -a "$LOGFILE"; then
          echo -n -e "${RED}Command was NOT successful on $HOST ... ${ENDCOLOR}" 2>&1 | tee -a "$LOGFILE"
          # Shall we proceed or not?
          if [ "$ABORT" = "YES" ]; then
            echo -n -e "${RED}Aborting.${ENDCOLOR}\n" 2>&1 | tee -a "$LOGFILE"
            exit 1
          else
            echo -n -e "${GREEN}Proceeding, as configured.${ENDCOLOR}\n" 2>&1 | tee -a "$LOGFILE"
          fi
        fi
      else
        echo -e "${GREEN}Connecting to $HOST ...${ENDCOLOR}"
        # Test if ssh-command was successful
        if ! ssh -n -o ConnectTimeout=10 ${SSH_OPTS[@]+"${SSH_OPTS[@]}"} "$SSH_USER"@"$HOST" "${COMMANDPART}"; then
          echo -n -e "${RED}Command was NOT successful on $HOST ... ${ENDCOLOR}"
          # Shall we proceed or not?
          if [ "$ABORT" = "YES" ]; then
            echo -n -e "${RED}Aborting.${ENDCOLOR}\n"
            exit 1
          else
            echo -n -e "${GREEN}Proceeding, as configured.${ENDCOLOR}\n"
          fi
        fi
      fi
    done < "$HOSTLIST"
  else
    echo -e "${RED}Hostlist \"$HOSTLIST\" is empty. Exiting.${ENDCOLOR}"
    exit 1
  fi
else
  echo -e "${RED}Hostlist \"$HOSTLIST\" is not readable. Exiting.${ENDCOLOR}"
  exit 1
fi
```
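The pipefail comment in the script is easy to verify locally. The sketch below uses `false` as a stand-in for a failing ssh call and runs every case in a fresh `bash -c` process, so the calling shell's own options can't skew the result. It shows that without pipefail the pipeline reports tee's success, that with pipefail it reports the failure, and that under `set -e` a failing `... | tee` pipeline ends the script on the spot, so a `$?` check placed after it is never reached. Only wrapping the pipeline in `if !` lets the script react itself (for example to honour `-a NO`). The function names are illustrative only.

```bash
#!/bin/bash
# Demonstrates pipefail with "command | tee"; "false" stands in for a
# failing ssh call. Each demo runs in a fresh bash process.

# Without pipefail: tee's exit status (0) hides the failure
demo_without_pipefail() {
  bash -c 'false | tee /dev/null; echo "$?"'
}

# With pipefail: the failing command's status (1) is reported
demo_with_pipefail() {
  bash -c 'set -o pipefail; false | tee /dev/null; echo "$?"'
}

# set -e + pipefail: the shell exits at the pipeline, the echo never runs
demo_set_e_exits() {
  bash -c 'set -eo pipefail; false | tee /dev/null; echo "checked: $?"'
}

# "if !" exempts the pipeline from set -e, so the failure can be handled
demo_set_e_handled() {
  bash -c 'set -eo pipefail
    if ! false | tee /dev/null; then echo "handled"; fi'
}
```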

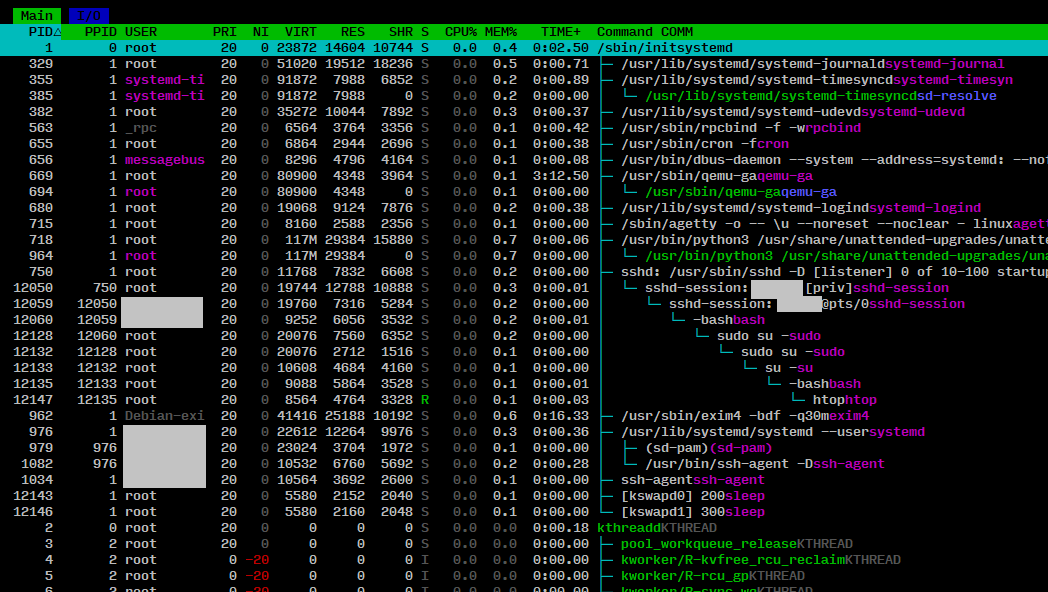

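A caveat regarding `-r`: the script builds the remote command as `sudo su -c '${COMMAND}'`, so a command that itself contains single quotes (an `awk '{print $1}'` call, for example) breaks out of that quoting. One possible fix, sketched here under the assumption that the remote login shell is bash or another POSIX-compatible shell, is to let bash's `printf '%q'` escape the command instead of wrapping it in hand-written quotes. The function name `build_sudo_command` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: build the "sudo su -c ..." command line with
# printf %q instead of hand-written single quotes, so commands containing
# quotes survive the extra parsing step on the remote shell.
# Assumption: bash or a POSIX-compatible remote login shell. Note that %q
# emits bash-only $'...' quoting for strings with control characters
# (e.g. embedded newlines).
build_sudo_command() {
  local command="$1"
  printf 'sudo su -c %q' "$command"
}
```

In the script, `COMMANDPART="sudo su -c '${COMMAND}'"` would then become `COMMANDPART="$(build_sudo_command "$COMMAND")"`.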