Quit your shitty storytelling, or: How to spot a dropshipper manipulating your emotions and tricking you into buying their overpriced garbage

YouTube showed me a short. In it, a young girl of colour could be seen. She was devastated by online comments from people who don't like her handcrafted fantasy chalices. For the sake of this text, I ask you to watch the video now. The link is:

https://www.youtube.com/watch?v=aaMCGFH9Fvc

Have you watched the video? Good.

What did you take away from it? Initially, I thought the following:

- A girl is handcrafting fantasy goblets and chalices

- She received negative comments and reviews because she is a person of colour and people didn't appreciate her work

- Now, she is trying to make a living by selling them via her website

Do you agree with me? Good.

And yes, there is much more to say about this video. For example, how it is cut: in reality it consists of three or more videos. The hands of the person change several times, etc. This too should give more than one hint that something is shady. But I'll leave that out here for the sake of brevity.

Lesson 1: Always look for the imprint

I always look for the imprint before buying something. I want to know who I'm buying from. More importantly: From where? All too often, low-quality products are presented and marketed as high-quality luxury items, because they can be sold at a much higher price.

The YouTube channel is https://www.youtube.com/@DungeonChalices. If you visit their channel's main page, you will see a link to https://www.dungeonchalice.com/products/dragon-chalices. When I click on it, I am immediately greeted with a call to action, urging me to buy NOW as there is a limited-time offer: 40% off and free shipping! Wow!

Surely we want to help that poor girl and buy the chalice for 35€, right?

Remember the first lesson: read the imprint.

But there isn't one listed. Strange. If we click on the company name at the bottom of each page, we are directed to an anonymous contact form. We can provide our contact details (name, email address and message), but we are not told who this email will be sent to. We still have no postal address, company name, or anything like that. Strange. A legitimate company shouldn't have a problem telling us its legal form and where it is registered, should it?

Lesson 2: If there is no imprint, read the Whois data

Fortunately, there is another source of information that we can use: the Domain Name System's Whois data. When someone registers a domain, the company registering it on behalf of the customer must make certain details public. For example, they must provide a contact for administrative or legal issues. We can also see when the domain was first registered and when it was last renewed.

Nowadays, you won't get the names directly, as domain registrars typically offer privacy options as standard. In this case, they replace your name and address with the details of a legal entity that they own. This is 100% legal, as long as you can be contacted. It doesn't matter whether this is done via proxy (for privacy reasons) or directly.

I used https://lookup.icann.org/en/lookup. And what did I learn from this? The domain dungeonchalice.com was registered on August 31st 2025 at 00:50:46 UTC.

Everything else is redacted, apart from a third-party service that can be used to get in contact with the domain owner. So nothing that answers our initial question.
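If you'd rather not click through a web form, the same registration date can be read by a script. The ICANN lookup is built on RDAP, which returns JSON containing a list of `events`. Below is a minimal sketch, assuming a response of that shape. `SAMPLE_RDAP` is a hand-written stand-in and not a real server reply; only the registration timestamp in it comes from my lookup. The function name `registration_date` is made up for this example.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Hand-written sample in the shape of an RDAP domain response.
# Only the registration timestamp is taken from the lookup described
# above; everything else a real response contains is left out.
SAMPLE_RDAP = """
{
  "ldhName": "dungeonchalice.com",
  "events": [
    {"eventAction": "registration", "eventDate": "2025-08-31T00:50:46Z"}
  ]
}
"""


def registration_date(rdap_json: str) -> Optional[datetime]:
    """Return the registration timestamp from an RDAP-style response, if any."""
    data = json.loads(rdap_json)
    for event in data.get("events", []):
        if event.get("eventAction") == "registration":
            # Older Python versions cannot parse a trailing "Z",
            # so spell out the UTC offset explicitly.
            return datetime.fromisoformat(event["eventDate"].replace("Z", "+00:00"))
    return None


print(registration_date(SAMPLE_RDAP))  # 2025-08-31 00:50:46+00:00
```

A response without a registration event simply yields `None`, which is itself worth a raised eyebrow.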

Uhm, but wait... Isn't it Monday, 1st September 2025? Yep. Isn't it strange that the domain name was only registered a day or so ago? Yes, it is. From my experience in the eCommerce business (I designed parts of the online shop platform for one of Germany's biggest hosting companies) I can say that the domain name is usually registered long before any website is built or any online marketing is done.

Why? Because the company name is usually used as the domain name. Or the name of a product or brand. Even a trademark. This means it has to be known beforehand, as logos have to be designed, legal documents may need to include that name, etc.
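The "how old is this domain?" check is simple date arithmetic. Here is a minimal sketch using the registration timestamp from the lookup. Two things are my assumptions and not facts: the time of day on 1 September (I picked noon UTC) and the 30-day threshold for "suspiciously new", which is an arbitrary gut value. The function names are made up for this example.

```python
from datetime import datetime, timedelta, timezone

# Registration timestamp as reported by the lookup.
REGISTERED = datetime(2025, 8, 31, 0, 50, 46, tzinfo=timezone.utc)

# Assumption: the exact time of day on 1 September is arbitrary (noon UTC).
CHECKED = datetime(2025, 9, 1, 12, 0, 0, tzinfo=timezone.utc)

# Hypothetical threshold; adjust to your own level of paranoia.
SUSPICIOUS_AGE = timedelta(days=30)


def domain_age(registered: datetime, checked: datetime) -> timedelta:
    """Time elapsed between registration and the moment we looked."""
    return checked - registered


def looks_freshly_registered(
    registered: datetime,
    checked: datetime,
    threshold: timedelta = SUSPICIOUS_AGE,
) -> bool:
    """True if the domain is younger than the chosen threshold."""
    return domain_age(registered, checked) < threshold


age = domain_age(REGISTERED, CHECKED)
print(f"Domain age: {age.days} day(s)")  # Domain age: 1 day(s)
print("Suspiciously new:", looks_freshly_registered(REGISTERED, CHECKED))  # True
```

A young domain alone proves nothing, of course. It is just one more hint to add to the pile.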

And more importantly: Designing a website and getting approved by the various online payment providers like PayPal, ApplePay, AmazonPay, Klarna, etc. takes time too. If we look back at that website, they offer payment via:

- American Express

- ApplePay

- GooglePay

- Klarna

- Maestro? (Well, they use the Maestro logo, but Maestro itself isn't conducting business anymore...)

- Mastercard

- Shop Pay

- Union Pay

- Visa

No PayPal? Strange. And when we go to checkout, even this information doesn't match. In reality, only American Express, Google Pay, Klarna, Mastercard, Shop Pay and Visa are listed.
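The mismatch between the logos shown in the shop and the options at checkout is easy to pin down with a set difference. A small sketch follows; the two sets are typed in by hand from what I saw on the site (with spellings normalised), and the function name is made up for this example.

```python
# Payment logos advertised on the shop pages (spelling normalised by me).
ADVERTISED = {
    "American Express", "Apple Pay", "Google Pay", "Klarna", "Maestro",
    "Mastercard", "Shop Pay", "UnionPay", "Visa",
}

# Payment methods actually offered at checkout.
AT_CHECKOUT = {
    "American Express", "Google Pay", "Klarna", "Mastercard", "Shop Pay", "Visa",
}


def missing_at_checkout(advertised: set, at_checkout: set) -> list:
    """Methods that are advertised but cannot actually be selected."""
    return sorted(advertised - at_checkout)


print(missing_at_checkout(ADVERTISED, AT_CHECKOUT))
# ['Apple Pay', 'Maestro', 'UnionPay']
```

So three of the nine advertised logos are pure decoration.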

Anyway, there is no way to achieve this in mere hours. There has to be an existing business behind it which is used to process the payments. Usually its name shows up on our credit card statement or bank records. Alas, I'm not going to buy anything. Most likely that company name wouldn't help me either, or it would just be the name of some unrelated third party processing the payments for the dropshipper.

Are you still of the opinion that a poor black girl is selling her handcrafted goblets here? - Yeah..

Lesson 3: Use the reverse image search to find better deals

At this point I was pretty sure we were dealing with a dropshipper who is just very good at the online marketing and storytelling part of his/her business. It didn't get better when I noticed that right-click was blocked. Yeah, good thing that Firefox also supports Shift+Right-click to open the context menu. Then I copied the image address for the "Ashfang" chalice and pasted it into Google's reverse image search.

This URL: https://dungeonchalice.com/cdn/shop/files/Screenshot_2025-08-31_010624.png?v=1756595436&width=823

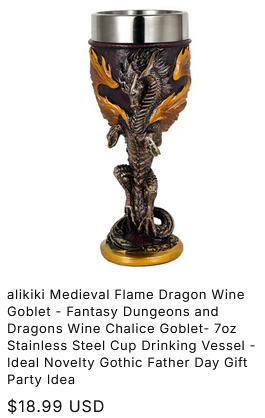

Belonging to this image:

Leads us directly to this shop: https://www.alikiki.net/collections/dragon-goblets

And look! It's our goblet! For just half of what the other shop would charge us.

In fact, the pictures are so identical that I assume the dropshipper just used their product pictures instead of creating some of his/her own.

And to be 100% fair: I even doubt that "alikiki" is the original manufacturer as there are several listings on Amazon selling the same goblet.

Additionally: Did you notice the word "Screenshot" in the file name? This too indicates that someone took a picture from another website and used it as their own. After all, if you manufacture the goods yourself, wouldn't you just upload properly taken product photos?
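If you want to check a whole bunch of product images for this tell, the file name can be pulled out of the URL with the standard library. A minimal sketch, using the image URL from this shop; the function names are made up for this example, and a hit is only a hint, not proof.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

IMAGE_URL = (
    "https://dungeonchalice.com/cdn/shop/files/"
    "Screenshot_2025-08-31_010624.png?v=1756595436&width=823"
)


def image_file_name(url: str) -> str:
    """Return the last path segment of the URL, without the query string."""
    return PurePosixPath(urlparse(url).path).name


def looks_like_screenshot(url: str) -> bool:
    """True if the file name contains the word 'screenshot' (any case)."""
    return "screenshot" in image_file_name(url).lower()


print(image_file_name(IMAGE_URL))        # Screenshot_2025-08-31_010624.png
print(looks_like_screenshot(IMAGE_URL))  # True
```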

Lesson 4: A quick Amazon search usually does the job too

This is kind of a shortcut. The reason: If a product is selling well, there are always people who are in it for the quick cash. Hence every well-selling item on the internet is most likely also listed on Amazon. A short search usually leads to good results and often provides us with cheaper alternatives.

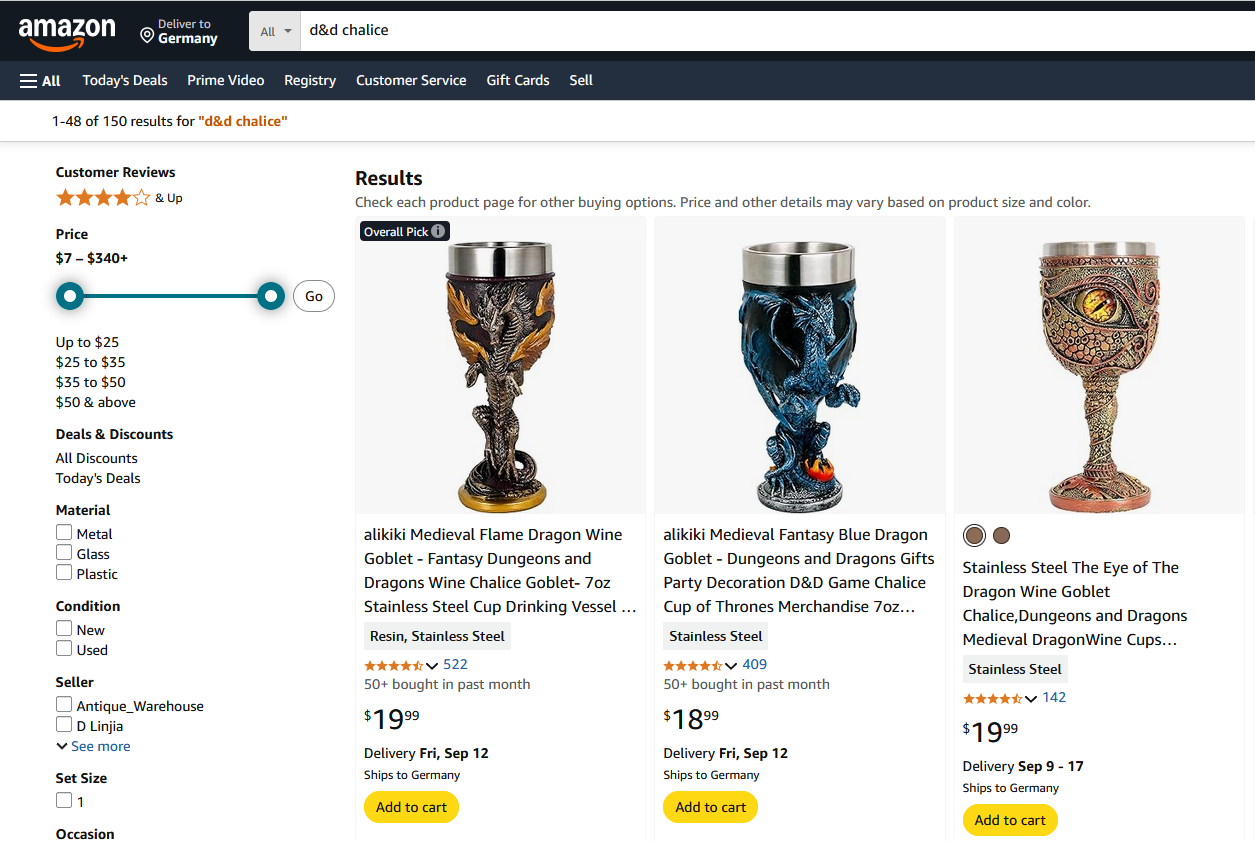

Just like in this case, when I simply search for: d&d chalice

These are just the first three results. The interesting thing is that the first two goblets are from the shop we found earlier. The third, however, is also sold by the dropshipper, but is from a different manufacturer.

This comes as no surprise, as dropshippers usually target best-selling items.

So, what can we learn from this?

Firstly: Dropshippers should go f**k themselves.

Secondly: Never buy something on impulse for emotional reasons just because you saw a stupid YouTube video.

You will be overcharged considerably.