Opinion: fail2ban doesn't increase system security, it's just a mere logfile cleanup tool

Like many IT people, I pay to have my own server for personal projects and self-hosting. As such, I am responsible for securing these systems, as they are, of course, connected to the internet and provide services to everyone, like this blog, for example. So I often read about people installing Fail2Ban to "increase the security of their systems".

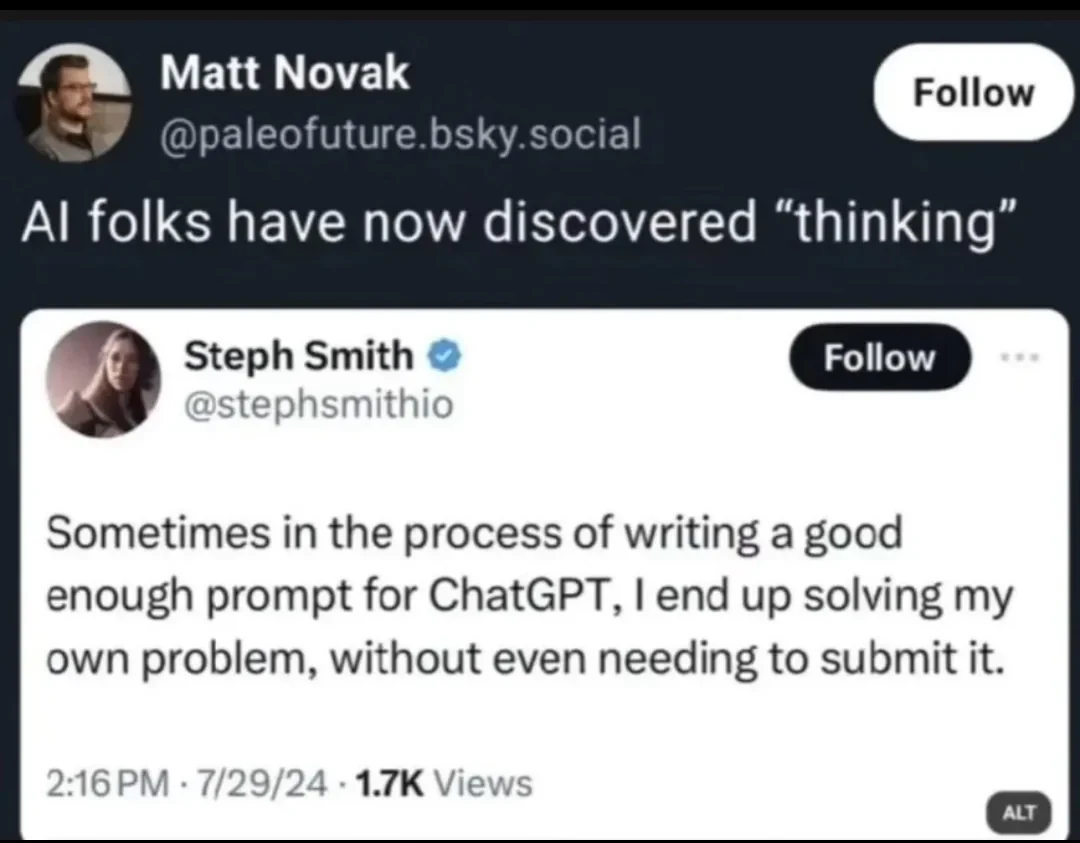

And every time I read this, I am like this popular meme from the TV series Firefly:

I don't share this view of Fail2Ban. In fact, I disagree that it improves security at all. But I'll keep quiet, knowing that starting this discussion is neither helpful nor wanted.

For me, Fail2Ban is just a log cleanup tool. Its only benefit is that it will catch repeated login attempts and deny them by adding firewall rules to iptables/nftables to block traffic from the offending IPs. This prevents hundreds or thousands of extra logfile lines about unsuccessful login attempts. So it doesn't improve the security of a system, as it doesn't prevent unauthorised access or strengthen authorisation or authentication methods. No, Fail2Ban - by design - can only act when an IP has been seen enough times to trigger an action from Fail2Ban.
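To make "seen enough times" concrete, here is a minimal Python sketch of per-IP sliding-window counting of the kind Fail2Ban performs. The parameter names borrow Fail2Ban's `maxretry`, `findtime` and `bantime` jail options, but the `BanTracker` class is a hypothetical, simplified model, not Fail2Ban's actual code.

```python
from collections import defaultdict, deque


class BanTracker:
    """Hypothetical, simplified model of Fail2Ban-style per-IP failure counting."""

    def __init__(self, maxretry=5, findtime=600, bantime=600):
        self.maxretry = maxretry      # failures needed to trigger a ban
        self.findtime = findtime      # sliding window length in seconds
        self.bantime = bantime        # ban duration in seconds
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self.banned_until = {}              # ip -> time at which the ban expires

    def is_banned(self, ip, now):
        return self.banned_until.get(ip, float("-inf")) > now

    def record_failure(self, ip, now):
        """Record one failed login seen in the log; return True if it triggers a ban."""
        window = self.failures[ip]
        window.append(now)
        # Drop failures that have fallen out of the findtime window.
        while window and window[0] <= now - self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            self.banned_until[ip] = now + self.bantime
            window.clear()
            return True
        return False


tracker = BanTracker()
# Five failures from one IP, ten seconds apart (203.0.113.0/24 is a documentation range).
results = [tracker.record_failure("203.0.113.7", t) for t in range(0, 50, 10)]
print(results)                               # [False, False, False, False, True]
print(tracker.is_banned("203.0.113.7", 41))  # True
```

Note what this model cannot do: the first `maxretry` failed attempts always reach the SSH daemon, and a successful login never produces a failure line, so it is never counted at all.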

With enough luck on the part of the attacker - or negligence on the part of the operator - a login will still succeed. Fail2Ban won't save you if you allow root to login via SSH with the password "root" or "admin" or "toor".
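A quick back-of-the-envelope calculation shows how little the ban threshold limits a patient attacker. Assuming a simplified model in which an IP is banned only after `maxretry` failures within `findtime` seconds (the names of Fail2Ban's jail options), an attacker who keeps each IP just under that threshold is never banned. The numbers below (5 retries per 10 minutes, 1,000 source IPs) are illustrative assumptions, not measurements.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def unbanned_guesses_per_day(maxretry, findtime_s, ips=1):
    """Roughly how many guesses per day stay below a maxretry-per-findtime ban threshold.

    Simplified model (an assumption): each IP spreads its attempts evenly so
    that no findtime window ever holds maxretry failures, and every IP is
    counted independently.
    """
    windows_per_day = SECONDS_PER_DAY / findtime_s
    return int(windows_per_day * (maxretry - 1) * ips)


# Illustrative values: 5 retries per 10 minutes, from 1 IP and from 1,000 IPs.
print(unbanned_guesses_per_day(maxretry=5, findtime_s=600))            # 576
print(unbanned_guesses_per_day(maxretry=5, findtime_s=600, ips=1000))  # 576000
```

Against a password like "root", the attacker needs only one of those guesses.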

Granted, even the Fail2Ban developers know this, and they state it prominently on the project's GitHub page:

> Though Fail2Ban is able to reduce the rate of incorrect authentication attempts, it cannot eliminate the risk presented by weak authentication. Set up services to use only two factor, or public/private authentication mechanisms if you really want to protect services.

Yet, the number of people I see installing Fail2Ban to "improve SSH security" but refusing to use public/private key authentication is staggering.

I only allow public/private key login for select non-root users specified via `AllowUsers`. Absolutely no password logins are allowed. I've moved SSH away from port 22/tcp, and I don't run Fail2Ban: with this setup, there aren't that many login attempts anyway. And those that do happen tend to abort pretty early once they realise that password authentication is disabled.

Although, in all honesty: thanks to services like https://www.shodan.io/ and others, finding the changed SSH port is not a problem. There are dozens of tools that can detect what is running behind a port and act accordingly. Therefore, I still see my fair share of SSH brute-force attempts. Disabling password authentication is the real game changer.
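Why a non-standard port hides so little: an SSH server announces itself as soon as a client connects, with an identification string that RFC 4253 requires to start with `SSH-2.0-` (servers offering backward compatibility may send `SSH-1.99-`). The following Python sketch is a hypothetical, minimal version of that detection step; the function names are made up for this example, and it assumes the identification line is the first line the server sends (RFC 4253 allows a server to send other lines first).

```python
import socket


def grab_banner(host, port, timeout=3.0):
    """Connect to host:port and return the first line the server sends."""
    data = b""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # RFC 4253 caps the identification string at 255 bytes including CR LF.
        while b"\n" not in data and len(data) < 255:
            chunk = sock.recv(256)
            if not chunk:  # server closed the connection
                break
            data += chunk
    return data.split(b"\n", 1)[0].rstrip(b"\r")


def looks_like_ssh(banner):
    """True if the line is an SSH identification string ('SSH-protoversion-...')."""
    return banner.startswith((b"SSH-2.0-", b"SSH-1.99-"))
```

Calling `looks_like_ssh(grab_banner(host, port))` against any open port tells you whether SSH is listening there, whatever the port number. Real scanners (for example Nmap's `-sV` service detection) apply many such probes.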

So do yourself a favour: Don't rely on Fail2Ban for SSH security. Rely on the following points instead:

- Keep your system up to date! Updates also remove outdated or broken ciphers and add support for newer, more secure ones. All the added and improved SSH security gives you nothing if an attacker can gain root privileges via another vulnerability.

- `AllowUsers` or `AllowGroups`: Only the specified users (or members of the specified groups) may log in via SSH. This is generally preferred over using `DenyUsers` or `DenyGroups`, as it's wiser to specify "what is allowed" than "what is forbidden". The bad guys are pretty damn good at finding the flaws and holes in the latter.
  - If you don't see what I mean, read this hilarious article about how "virtual Minecraft Denmark" was blown up with minecarts using TNT: https://www.gamespot.com/articles/danish-government-creates-entire-country-in-minecraft-users-promptly-blow-it-up-and-plant-american-flag/1100-6419412/

- `DenyUsers` or `DenyGroups`: Depending on your groups, this may be useful too, but I try to avoid it.

- `AuthorizedKeysFile /etc/ssh/authorized_keys/%u`: This places the `authorized_keys` file for each user (`%u` expands to the username) in the `/etc/ssh/authorized_keys/` directory. As long as these files are owned by root and not writable by the users, users can't add public keys by themselves. Only root can.

- `PermitEmptyPasswords no`: Should be self-explanatory. This is already the default.

- `PasswordAuthentication no` and `PubkeyAuthentication yes`: Disables authentication via password and enables authentication via public/private keys.

- `AuthenticationMethods publickey`: Only offer public key authentication. Without it, the server normally advertises several methods, such as `publickey,password`.

- `PermitRootLogin no`: Create a non-root account and use `su`, or install `sudo` and use that if needed. See also `AllowUsers`.
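To check a server against this list, a small script can help. The following Python sketch is a hypothetical helper, not an existing tool: it parses sshd_config text and reports deviations from the settings above. It deliberately ignores `Match` blocks and `Include` directives, so for the effective configuration it is safer to feed it the output of `sshd -T` (typically run as root), which prints the effective settings with lowercase keywords. The user `alice` and port `2222` in the sample are placeholders.

```python
import re

# Global settings from the list above, as lowercase keyword -> expected value.
RECOMMENDED = {
    "permitemptypasswords": "no",
    "passwordauthentication": "no",
    "pubkeyauthentication": "yes",
    "authenticationmethods": "publickey",
    "permitrootlogin": "no",
}


def parse_sshd_config(text):
    """Return {lowercase keyword: value} for the global section of an sshd_config.

    Simplifications: Include directives are not followed and parsing stops at
    the first Match block.
    """
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # A keyword is separated from its arguments by whitespace or one '='.
        parts = re.split(r"\s*=\s*|\s+", line, maxsplit=1)
        keyword = parts[0].lower()
        value = parts[1] if len(parts) > 1 else ""
        if keyword == "match":
            break
        # For most keywords, sshd uses the first value it reads.
        options.setdefault(keyword, value)
    return options


def audit(text):
    """Return a list of findings; an empty list means every check passed."""
    options = parse_sshd_config(text)
    findings = []
    for keyword, expected in RECOMMENDED.items():
        actual = options.get(keyword)
        if actual is None or actual.lower() != expected:
            findings.append(f"{keyword}: expected {expected!r}, found {actual!r}")
    if "allowusers" not in options and "allowgroups" not in options:
        findings.append("neither AllowUsers nor AllowGroups is set")
    return findings


# Hypothetical config: user "alice" and port 2222 are placeholders.
sample = """
Port 2222
AllowUsers alice
PermitRootLogin no
PasswordAuthentication no
PubkeyAuthentication yes
AuthenticationMethods publickey
PermitEmptyPasswords no
AuthorizedKeysFile /etc/ssh/authorized_keys/%u
"""
print(audit(sample))  # []
```

The check on `AllowUsers`/`AllowGroups` only tests that an allowlist exists; whether it names the right accounts is still up to you.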