Puppet goes enshittification (Updated)

Photo by Pixabay: https://www.pexels.com/photo/computer-screen-turned-on-159299/

This one came unexpectedly. Puppetlabs, the company behind the configuration management software Puppet, was purchased by Perforce Software in 2022 (and renamed "Puppet by Perforce"), and now, in November 2024, we are starting to see the fallout.

As Puppetlabs announced in a blog post on November 7th, 2024, they are, to use a euphemism, "moving the Puppet source code in-house."

Or in their words (emphasis mine):

In early 2025, Puppet will begin to ship any new binaries and packages developed by our team to a private, hardened, and controlled location. Our intention with this change is not to limit community access to Puppet source code, but to address the growing risk of vulnerabilities across all software applications today while continuing to provide the security, support, and stability our customers deserve.

They then go on at length to explain why this doesn't affect customers or the community, and that the community can still access that private, hardened, and controlled location via a separate "development license (EULA)". However, nothing is currently known about the terms of that EULA; details will only be released in early 2025, that is, after the split has already been made.

To put it bluntly: I call that bullshit. All the talk about security and supply-chain risks is nonsense. If Puppet really wanted to improve the technical security of their software, they could have done so in a myriad of other ways.

- Like integrating a code scanner into their build pipelines

- Doing regular code audits (with/from external companies)

- Introducing a four-eyes principle before commits are merged into the main branch

- Participating in bug-bounty programs

- And so on...

There is simply no reason to limit access to the source code to achieve that goal. (And I am not even touching on FUD, or on how open source enables easier and faster spotting of software defects and vulnerabilities.) Therefore this can only be viewed as a smokescreen to mask the real intention. I instead see it for what it truly is: an attempt to maximize revenue. After all, Perforce wants to see a return on their investment. And as fair as that is, the "how" leaves much to be desired...

We already have some sort of proof. Ben Ford, a former Puppet employee, wrote a blog post, "Everything is on fire....", in mid-October 2024 describing the negative experience he had in 2023 with Puppet's executives and vice presidents when he tried to explain the whole community topic to them (emphasis mine):

"I know from personal experience with the company that they do not value the community for itself. In 2023, they flew me to Boston to explain the community to execs & VPs. Halfway through my presentation, they cut me off to demand to know how we monetize and then they pivoted into speculation on how we would monetize. There was zero interest in the idea that a healthy community supported a strong ecosystem from which the entire value of the company and product was derived. None. If the community didn’t literally hand them dollars, then they didn’t want to invest in supporting it."

I find that astonishing. Puppet is such a complex piece of configuration management software, and it has so many community-developed tools supporting it (think of g10k), that totally neglecting everything the community has achieved is mind-blowingly short-sighted. Puppet itself doesn't manage to keep up with all the tools it has released in the past. The fates of tools like Geppetto or the Puppet plugin for IntelliJ IDEA speak for themselves. Promising a fully-fledged Puppet IDE based on Eclipse and then letting it rot? No official support from "Puppet by Perforce" for one of the most widely used and commercially successful integrated development environments (IDEs)? Wow. This is work the community contributes. And as we now know, Puppet doesn't give a damn about that. Cool.

EDIT November 13th 2024: I forgot to add some important facts regarding Puppet's ecosystem and the community:

- The official Puppetserver and PuppetDB container images were abandoned by Puppet Inc. in 2023 (source: a Docker Hub search for Puppet). Yes, you read that right. Puppet decided they don't need to offer official Puppet container images themselves, effectively ignoring the most important trend in IT technology of the last 10 years. Instead, Martin Alfke's Betadot GmbH made sure to provide them (I can wholeheartedly recommend his Puppet trainings and services). They are now available as voxpupuli/puppetserver and voxpupuli/puppetdb.

- The PuppetBoard, a web dashboard giving you insight into all the facts and values that Puppet agents gather on your nodes and that are stored in PuppetDB? Maintained by the voxpupuli community.

- It's a somewhat ironic joke that many customers use Foreman for this instead: the Foreman project, to which Red Hat contributes its own in-house developers.

- The PuppetForge? The repository serving Puppet modules to the Puppetserver. Yes, the puppet_forge Ruby gem is maintained by Puppet (ia-content is Puppet's Information Architecture Content team), but what if you want something more user-friendly, like a web frontend? You are out of luck. One was created by someone, but it has been unmaintained for 10 years, partly because its maintainer got kicked out of the GitHub organization. This shows another problem with company-run projects when knowledge and workload aren't properly distributed.

- Recently, a fork named gorge (a PuppetForge written in Go) was created, but it's too early to say anything about it. I'm also unsure whether it will include a web frontend that allows easy administration for people who aren't well-versed in Ruby, Git, or Puppet.

I consider the container images, at the very least, to be a top priority for many customers. And neglecting all the important tools around your core product isn't going to help either. These are exactly the kinds of requirements customers have and the questions they ask:

- How can we run it ourselves without constantly buying expensive support?

- How long will it take until we build up sufficient experience?

- What technology knowledge do our employees need in order to provide a flawless service?

- How can we ease the routine tasks? / What tools are there to help us in our daily business?

Currently, Puppet has a steep learning curve that is only made easier thanks to the community. And now we know Perforce doesn't see this as adding any value to their company. Great.

EDIT END

The most shocking part for me was that the Apache 2.0 license doesn't require the source code itself to be made available. Simply publishing a changelog should be enough to stay legally compliant (staying morally compliant is another matter...). And as Ben Ford pointed out in another blog post from November 8th, 2024, there is a reason they cannot change the license (emphasis mine, to match the author's):

"But here’s the problem. It was inconsistently maintained over the history of the project. It didn’t even exist for the first years of Puppet and then later on it would regularly crash or corrupt itself and months would go by without CLA enforcement. If Perforce actually tried to change the license, it would require a long and costly audit and then months or years of tracking down long-gone contributors and somehow convincing them to agree to the license change.

I honestly think that’s the real reason they didn’t change the license. Apache 2 allows them to close the source away and only requires that they tell you what they changed. A changelog might be enough to stay technically compliant. This lets them pretend to be open source without actually participating."

I absolutely agree with him. They wanted to go closed source but simply couldn't, because nobody had ever planned for that in the past. Or, as someone on the internet said: "Luckily the CLA is ironclad." So instead they did what was possible: moving the source code to an internal repository and using that as the source for all official Puppet packages. But will we, the community, still have access to those packages?

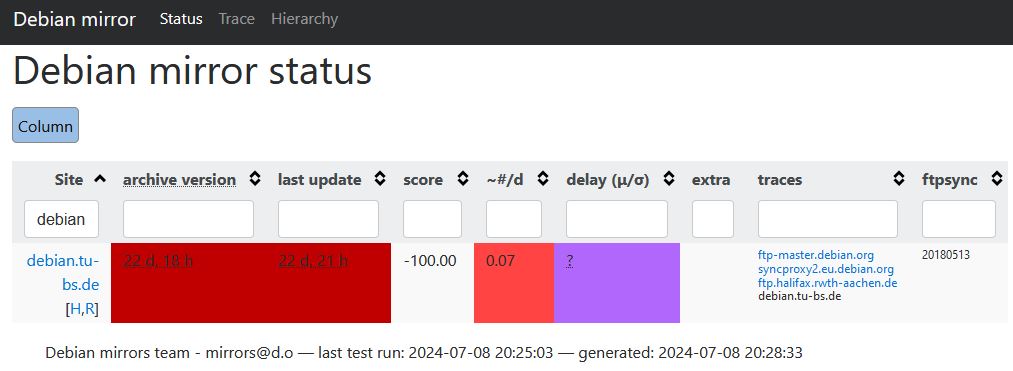

Based on how the blog post is written, I tend to say: no. Say goodbye to https://yum.puppetlabs.com/ and https://apt.puppetlabs.com/. Say goodbye to an easy way of getting your Puppet Server, Agent, and Bolt packages for your Linux distribution of choice.

Update 15th November 2024: I asked Ben Ford in one of his LinkedIn posts whether a decision had been made regarding the repositories, and he replied: "We will be meeting next week to discuss details. We'll all know a bit more then." As good as it is that this topic isn't off the table, it still adds to the current uncertainty. Personally, I would have thought those are the details you finalize before making such an announcement. But ah well, everyone is different... Update End

A myriad of new problems

This creates a myriad of new problems.

1. We will see a rift between "Puppet by Perforce" packages and community packages in technical compatibility and, most likely, in functionality too.

This mostly depends on how well Puppet contributes back to the open source repository and/or how well-written the changelog is. Regarding the latter, I invite you to check some of their release notes for new Puppet Server versions (Puppet Server 7 Release Notes / Puppet Server 8 Release Notes), although they did get better with version 8... Granted, they already stated the following in their blog post (emphasis mine):

We will release hardened Puppet releases to a new location and will slow down the frequency of commits of source code to public repositories.

This means customers using the open source variant of Puppet will be in somewhat dangerous waters regarding compatibility with the commercial variant, and vice versa. And I'm not only talking about compatibility between Puppet servers built from different packages, but also about things like how the Puppet CA works or how catalogues are generated. Keep in mind: migrating from one product to the other can also become significantly harder.

This could even affect Puppet module development if the commercial Puppet server contains resource types that the community-based one lacks, or implements them slightly differently. This will hurt customers on both sides and doesn't make Puppet look good. Is Perforce sure this move wasn't sponsored by Red Hat/Ansible, their biggest competitor?

2. Documentation disaster

As bad as the state of the Puppet documentation is (I find it extremely lacking in every aspect), at least there is one set and it's the only one. Starting in 2025, we will have two sets of documentation. Have fun working out the kinks...

Additionally, the documentation won't get better. According to this source, "Perforce actually axed our entire Docs team!" How good will a changelog or the documentation be when they are nobody's responsibility?

3. Community-provided packages vs. vendor packages

Nearly all customers I have worked for have some kind of policy defining what type of software is allowed on a system. Sometimes this goes as far as "No community-supported software, only software with official vendor support." Starting in 2025, this would mean these customers need to move to Puppet Enterprise and ditch the community packages. The problem I foresee is this: many customers already use Ansible in parallel, and most operations teams are tired of having to use two configuration management solutions. This gives them a strong argument in favour of Ansible, especially in times of economic hardship and budget cuts.

But again: getting packages from Puppet itself at least ensures you have the same packages everywhere. In 2025, when the one and only primary source for those packages goes dark, numerous others are likely to appear. Remember Oracle's move to make the Oracle JVM a paid-only product for commercial use? And how that fostered the creation of dozens of different JVMs? Yeah, that's a plausible scenario for the Puppet Server and Agent too, although I doubt we will ever see more than 3-4 viable parallel solutions at any time, given the amount of work involved and the fact that Puppet isn't nearly as widely needed as a JVM. Still, this poses a huge operational risk for every customer.

4. Was this all intentional?

I'm not really sure whether Perforce considered all this. However, they are not stupid; they must surely see the ramifications of their change. And this will lead customers to ask themselves ugly questions, like: "Was this kind of uncertainty intentional?" This shatters trust on a basic level. Trust that might never be regained.

5. Community engagement & open source contributors

Another big question is community engagement. We now have a private equity company that thinks nothing of the community, and the community knows this. There has already been a drop in activity since Perforce acquired Puppet, and I think this trend will continue. After all, the current situation amounts to: "We take everything we want from the community, but decide very carefully what, and whether, we give anything back in return." This doesn't work for many open source contributors. And it is the main reason why many will view Puppet as closed source from 2025 onward, despite it technically still being open source. But again, the community values morals and ethics higher than legal correctness.

So, where are we heading?

Personally, I wouldn't be too surprised if, in the future, we look back at this moment and say: "This was the start of the downfall. This is when Puppet became more and more irrelevant until its demise." My personal view is that Puppet has lacked vision and discipline for some years. Lots of stuff was created, promoted, and abandoned. Lots of stuff was handed over to the community to maintain. But still, the ecosystem wasn't simplified or streamlined. The documentation wasn't as detailed as it should have been. Tools and command-line clients lacked certain features you had to work around yourself. And so on... I even recently ditched Puppet in my homelab in favour of Ansible. The overhead of keeping it running, the extra work generated by Puppet itself just to keep it running: Ansible doesn't have any of that.

In my article "Get the damn memo already: Java11 reached end-of-life years ago" I wrote:

it the attention it deserves. If you don't a about your . And those assumptions won't be .

And this includes the community around your product, even more so for open source software.

Second, I wouldn't be surprised if many customers don't take the bait, don't switch to a commercial license, ride Puppet as long as it is feasible, and then simply switch to Ansible.

Maybe we will also see a fork, giving the community the possibility to break with Puppet's functionality and no longer having to maintain compatibility.

Time will tell.

New developments

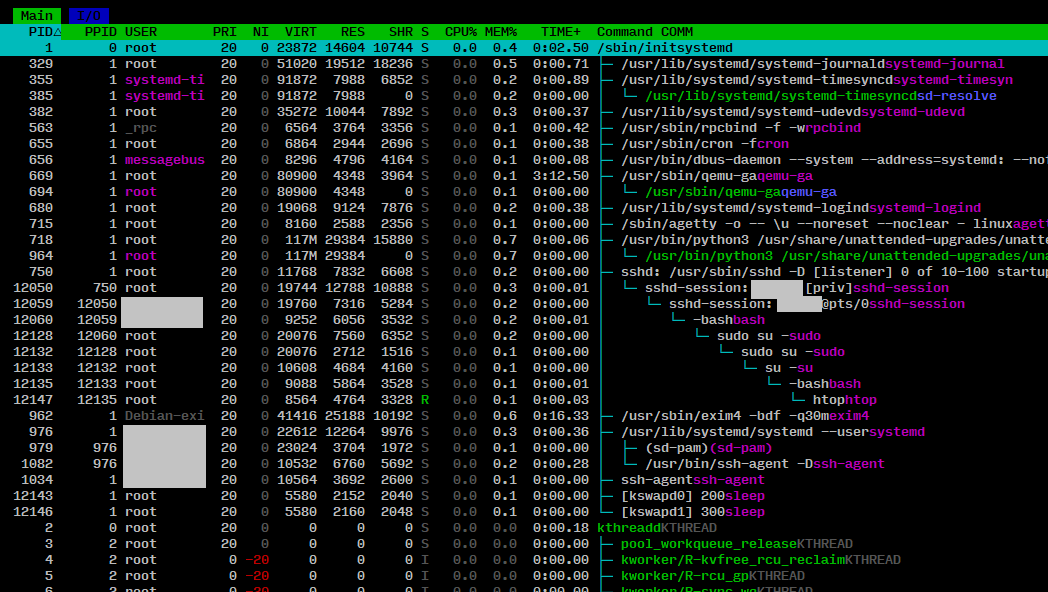

Added on November 15th: The first community-built packages are now available. So it looks like a fork is happening.

Read: https://overlookinfratech.com/2024/11/13/fork-announce/

EDIT: A newer post about the developments around the container images and the fork is here: "Puppet is dead. Long live OpenVox!"
